Vision

Humans interact with computers, much as they interact with one another, using

multiple perceptual modalities to communicate their wishes easily and

intuitively. They say "show it to me there," turning their heads or pointing

with their arms to indicate where. They point a handheld device at a scene or

an object, and ask the computer to find visually similar images and to provide

information related to those images. Humans communicate with computers

implicitly, using facial expressions and gestures, without having to express

everything in words.

Computers recognize and classify features (e.g., faces and automobiles) and actions (e.g., gestures, gait, and collisions) in their field of vision. They detect patterns and object interactions (e.g., people entering a room or traffic accidents). They use this knowledge to retrieve distributed information, and they generate images that convey information easily and intuitively to users. For example, they show how a scene would look if viewed from different vantage points, illuminated differently, or changed by some event (e.g., aging or the arrival or disappearance of some object).

Approach

The visual processing system contains visual perception and visual rendering

subsystems. The visual perception subsystem recognizes and classifies objects

and actions in still and video images. It augments the spoken language

subsystem, for example, by tracking direction of gaze of participants to

determine what or whom they are looking at during a conversation, thereby

improving the overall quality of user interaction. The visual rendering

subsystem enables scenes and actions to be reconstructed in three dimensions

from a small number of sample images without an intermediate 3D model. It can

be used to provide macroscopic views of application-supplied data.

Like the spoken language subsystem, the visual subsystem is an integral part of Oxygen's infrastructure. Its components have well-defined interfaces, which enable them to interact with each other and with Oxygen's device, network, and knowledge access technologies. Like lite speech systems, lite vision systems provide user-defined visual recognition, for example, of faces and handwritings.

Object recognition

A trainable object recognition component automatically learns to

detect limited-domain objects (e.g., people or different kinds of vehicles) in

unconstrained scenes using a supervised learning technology. This learning

technology generates domain models from as little information as one or two

sample images, either supplied by applications or acquired without calibration

during operation. The component recognizes objects even if they are new to the

system or move freely in an arbitrary setting against an arbitrary background.

As people do, it adapts to objects, their physical characteristics, and their

actions, thereby learning to improve object-specific performance over time.

For high-security transactions, where face recognition is not a reliable solution, a vision-based biometrics approach (e.g., fingerprint recognition) integrates sensors in handheld devices transparently with the Oxygen privacy and security environment to obtain cryptographic keys directly from biometrics measurements.

Activity monitoring and classification

An unobtrusive, embedded vision component observes and tracks moving objects in

its field of view. It calibrates itself automatically, using tracking data

obtained from an array of cameras, to learn relationships among nearby sensors,

create rough site models, categorize activities in a variety of ways, and

recognize unusual events.

Oxygen Today

A vision-aided acoustic processing system integrates microphone arrays and

cameras in perceptive environments. A ceiling-mounted microphone array uses

slight differences in audio signals at different microphones to amplify sounds

coming from selected locations, thereby helping separate multiple audio sources

and allowing users to interact with speech recognition systems without using a

close-talking microphone. (Trevor Darrell, Vision Interface Group)

A vision-aided acoustic processing system integrates microphone arrays and

cameras in perceptive environments. A ceiling-mounted microphone array uses

slight differences in audio signals at different microphones to amplify sounds

coming from selected locations, thereby helping separate multiple audio sources

and allowing users to interact with speech recognition systems without using a

close-talking microphone. (Trevor Darrell, Vision Interface Group)

Object tracking and recognition

A real-time object tracker uses range and appearance information from a

stereo camera to recover an object's 3D rotation and translation. When

connected to a face detector, the system accurately tracks head positions,

thereby enabling applications to perceive where people are looking. (Trevor Darrell, Vision Interface Group)

A real-time object tracker uses range and appearance information from a

stereo camera to recover an object's 3D rotation and translation. When

connected to a face detector, the system accurately tracks head positions,

thereby enabling applications to perceive where people are looking. (Trevor Darrell, Vision Interface Group)

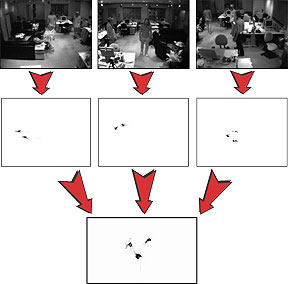

A person-tracking

system uses three camera modules, each consisting of stereo camera and a

computer, that are arranged to view an entire room and continually estimate

3D-point clouds of the objects in the room. The system clusters foreground

points into blobs that represent people, from which it can extract features

such as a person's location and posture. (Trevor Darrell, Vision Interface Group)

A person-tracking

system uses three camera modules, each consisting of stereo camera and a

computer, that are arranged to view an entire room and continually estimate

3D-point clouds of the objects in the room. The system clusters foreground

points into blobs that represent people, from which it can extract features

such as a person's location and posture. (Trevor Darrell, Vision Interface Group)

Synthetic profiles, generated as Image-Based Visual

Hulls using images from a small number of cameras in fixed locations, are

used to recognize people by their gait. In constraint-free environments, where

users move freely, this kind of view-independent identification is crucial

because no particular pose of a user can be presumed. (Trevor Darrell, Vision Interface Group)

Synthetic profiles, generated as Image-Based Visual

Hulls using images from a small number of cameras in fixed locations, are

used to recognize people by their gait. In constraint-free environments, where

users move freely, this kind of view-independent identification is crucial

because no particular pose of a user can be presumed. (Trevor Darrell, Vision Interface Group)

Oxygen's face detection and recognition system trains several classifiers on components of a face instead of training one classifier on the whole face. The system works well because it is robust against partial occlusion and because changes in patterns of small face components due to rotation in depth are less significant than changes in the whole face pattern. The system selects components automatically from a set of 3D head models based on the discriminative power of the components and their robustness against pose changes. (Tomaso Poggio , Center for Biological and Computational Learning)

The City Scanning Project

constructs textured 3D CAD models of existing urban environments, accurate to a

few centimeters, over thousands of square meters of ground area, from

navigation-annotated pictures, obtained with a novel "pose-camera". It uses a

fully automated system that scales to spatially extended, complex environments

with thousands of structures, under uncontrolled lighting conditions (i.e.,

outdoors), and in the presence of severe clutter and occlusion. (Seth Teller, Computer Graphics Group)

The City Scanning Project

constructs textured 3D CAD models of existing urban environments, accurate to a

few centimeters, over thousands of square meters of ground area, from

navigation-annotated pictures, obtained with a novel "pose-camera". It uses a

fully automated system that scales to spatially extended, complex environments

with thousands of structures, under uncontrolled lighting conditions (i.e.,

outdoors), and in the presence of severe clutter and occlusion. (Seth Teller, Computer Graphics Group)

Display technology

Efficient techniques for computational photography enhance the quality

of images with a high dynamic range, such as x-rays and high-contrast

photographs, without washing out colors or blurring details.

These techniques provide an automated alternative to labor-intensive

photographic methods, such as fill-in flash or dodging and burning in

a darkroom, that must be used for each shot or for each print.

(Fredo Durand,

Computer Graphics

Group)

Efficient techniques for computational photography enhance the quality

of images with a high dynamic range, such as x-rays and high-contrast

photographs, without washing out colors or blurring details.

These techniques provide an automated alternative to labor-intensive

photographic methods, such as fill-in flash or dodging and burning in

a darkroom, that must be used for each shot or for each print.

(Fredo Durand,

Computer Graphics

Group)

New techniques for computer animation improve upon traditional

techniques—which require artistry, skill, and careful attention

to detail—by automating parts of the animation process. A motion

sketching system enables animators to sketch, with a mouse-based

interface or with hand-gestures, how rigid bodies should move. The

sketches may be imprecise, physically infeasible, or have incorrect

timing. Nonetheless, the system computes a physically plausible

motion that best fits the sketch. (Jovan Popovic, Computer Graphics

Group)

New techniques for computer animation improve upon traditional

techniques—which require artistry, skill, and careful attention

to detail—by automating parts of the animation process. A motion

sketching system enables animators to sketch, with a mouse-based

interface or with hand-gestures, how rigid bodies should move. The

sketches may be imprecise, physically infeasible, or have incorrect

timing. Nonetheless, the system computes a physically plausible

motion that best fits the sketch. (Jovan Popovic, Computer Graphics

Group)

Efficient algorithms for constructing Image-Based Visual Hulls

allow rendering synthetic textured views of objects from arbitrary viewpoints.

A visual hull is a geometric shape obtained using silhouettes of an object as

seen from a number of views. Each silhouette is extruded creating a cone-like

volume that bounds the extent of the object. The intersection of these volumes

results in a visual hull, which approximates the object and is shaded using the

reference images as textures. (Leonard McMillan, former member of the Computer Graphics Group)

Efficient algorithms for constructing Image-Based Visual Hulls

allow rendering synthetic textured views of objects from arbitrary viewpoints.

A visual hull is a geometric shape obtained using silhouettes of an object as

seen from a number of views. Each silhouette is extruded creating a cone-like

volume that bounds the extent of the object. The intersection of these volumes

results in a visual hull, which approximates the object and is shaded using the

reference images as textures. (Leonard McMillan, former member of the Computer Graphics Group)

MikeTalk is a photorealistic text-to-audiovisual speech synthesizer.

MikeTalk processes a video of a human subject uttering a predetermined speech

corpus to learn how to synthesize the subject's mouth for arbitrary utterances

that may not be in the original video. MikeTalk's output is videorealistic in

the sense that it looks like a video camera recording of the subject, complete

with natural head and eye movements. (Tomaso Poggio, Center

for Biological and Computational Learning)

MikeTalk is a photorealistic text-to-audiovisual speech synthesizer.

MikeTalk processes a video of a human subject uttering a predetermined speech

corpus to learn how to synthesize the subject's mouth for arbitrary utterances

that may not be in the original video. MikeTalk's output is videorealistic in

the sense that it looks like a video camera recording of the subject, complete

with natural head and eye movements. (Tomaso Poggio, Center

for Biological and Computational Learning)